Last Updated on 04/10/2025 by 75385885

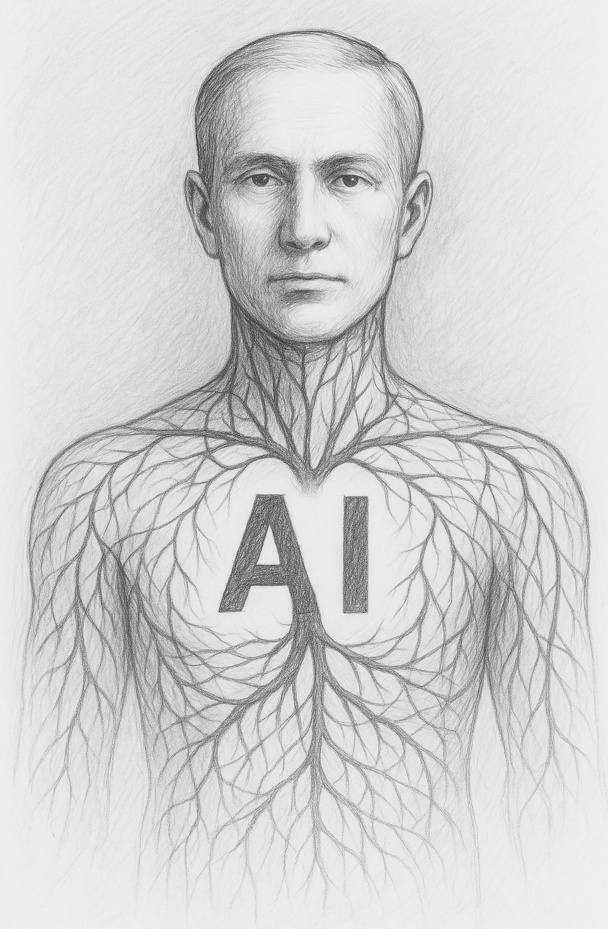

Introduction – When Magic Needs a Skeleton

Artificial intelligence is often described in magical terms: algorithms that can see patterns invisible to humans, models that “learn,” systems that appear to reason. But magic is fragile. Without discipline, it vanishes at the first gust of scrutiny.

That is why governance is not a bureaucratic brake but the skeleton that holds the body upright. Without bones, an organism collapses; without governance, AI risks becoming a reflex with no direction.

And yet, a skeleton alone is not life. For an organism to live, it needs a brain that gives vision, muscles and nerves that allow movement, armor that protects against threats, and a heart that pumps oxygen through the system. The same is true of AI in organizations: only when ethics, systems, people, safeguards, and legitimacy all work together can AI be a force for progress.

This article explores the AI governance ecosystem through that organism metaphor (brain, skeleton, muscles, armor, and heart) and draws on examples from companies around the globe.

The lesson is universal: AI is only as intelligent as the governance that animates it.

Read more on one of the most widely used internal control frameworks, from COSO, in COSO Internal Control Framework: Lessons from Global Corporate Failures.

The Brain – Vision and Ethics

Every body needs a brain. In governance, the brain is vision: the place where purpose, ethics, and direction originate.

Fiction as Laboratory

Science fiction often plays the role of tomorrow’s ethics board. In Her, a man falls in love with an AI assistant. In Ex Machina, a humanoid robot manipulates her creator. These are not predictions; they are test cases that reveal what is at stake: consent, manipulation, and responsibility.

Boards should treat these narratives as laboratories of the imagination. They do not supply answers, but they ask the questions governance must address long before regulators catch up.

Vision Without Ethics – Philips and Wirecard

Philips positioned itself as a purpose-driven company, integrating circular economy and sustainability into its mission. Yet the massive recall of medical devices undermined that vision overnight. Trust is not secured by slogans but by operational discipline and transparency.

Wirecard illustrates the darker extreme: dazzling narratives of fintech success, underpinned by fabricated numbers. Vision without ethics becomes hallucination. AI, with its ability to generate convincing outputs, could make such hallucinations even more dangerous. Governance must be the frontal cortex that distinguishes imagination from delusion.

Fairness and Bias – Barclays and Aadhaar

Barclays introduced AI models in credit scoring. The outcome: biases encoded in historic data that disadvantaged vulnerable groups. Customers and regulators demanded explainability. Governance is the neural circuitry that detects when signals misfire.
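One way to make "detecting when signals misfire" concrete is to compare approval rates across customer groups. The sketch below is illustrative only: the data is synthetic, the group labels and the 0.8 escalation threshold are assumptions made for this example, and it does not describe how any bank named in this article tests its models.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Synthetic, made-up decisions purely for illustration.
sample = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = approval_rates(sample)
ratio = disparity_ratio(rates)
needs_review = ratio < 0.8  # hypothetical threshold for escalating to human review
```

A low ratio does not prove unfairness on its own. It is a signal that people should examine the model and the data behind it, and document what they find.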

India’s Aadhaar biometric identity system shows similar fragility on a national scale. Intended to promote inclusion, it also created exclusion where errors or design flaws locked citizens out of essential services. A brain that misroutes signals can paralyze the whole body.

Explainability by Design – Nubank and Tesco

Nubank, Latin America’s digital bank, uses AI for credit decisions. Its challenge is fairness and clarity: customers must understand why they are denied credit. Tesco faces a parallel issue: its Clubcard loyalty program uses AI to personalize offers, but without clear communication, customers may feel surveilled rather than served.

Explainability is like a nervous system in AI ethics and accountability: it must be built in from the start. Systems that cannot be explained cannot be trusted, and governance must insist that transparency is not retrofitted but designed.

Read more in our separate blog, The Brain of AI Governance – Vision and Ethics as the Seat of Corporate Judgment, or read an article from The New York Times, Rethinking ‘Checks and Balances’ for the A.I. Age.

The Skeleton – Operating Models and Technology

The skeleton gives structure and resilience. In AI governance, this is the operating model: the systems, data flows, and contracts that support innovation.

The IoT Washing Machine

A washing machine connected to energy markets and maintenance schedules is no longer just a household device. It becomes a vertebra in the digital skeleton. If it fails, insurers, grid operators, and households all feel the fracture.

Governance must ask simple but profound questions: Who owns the data? Who is responsible when systems fail? Even the smallest bones matter in holding up the skeleton.

Read the in-depth blog on The Washing Machine and the Internet of Things: A Governance Story.

ERP as Cathedrals – Siemens and Tesco

Enterprise systems are cathedrals: built slowly, meant to endure. AI adds stained glass windows: predictive analytics, anomaly detection, scenario planning. But cathedrals collapse if their foundations crack. Tesco learned this the hard way when ERP system failures contributed to misreporting scandals. Siemens, by contrast, demonstrates how to align digital infrastructure with long-term ESG goals. The skeleton can be a backbone or a brittle liability.

Safety and Redundancy – Airbus and NHS

Airbus uses AI for predictive maintenance in aviation. Governance here is literally life-saving: redundancy, audit trails, and regulator oversight are the ribs that protect vital organs.

The NHS provides another lesson. An AI tool for sepsis risk failed under real-world pressure, leading to late alerts. The skeleton cracked under stress. Governance must subject systems to pressure tests before they carry human lives.

The Joints of Governance

Bones without joints are rigid. AI governance requires connective tissue:

- Model risk management: every model tested and monitored.

- Vendor governance: contracts with audit rights and exit clauses.

- Data lineage: tracing information back to its source.

These ligaments make the skeleton flexible and strong. Without them, the system is brittle, prone to fracture.
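Of the three joints above, data lineage is the easiest to sketch in code. The example below assumes lineage is recorded as a simple mapping from each dataset to the datasets it was built from. The dataset names and the `trace_sources` helper are hypothetical and chosen only for illustration.

```python
def trace_sources(dataset, parents):
    """Return the root sources that a dataset ultimately derives from."""
    seen = set()
    roots = set()
    stack = [dataset]
    while stack:
        current = stack.pop()
        if current in seen:
            continue  # already visited, which also guards against cycles
        seen.add(current)
        upstream = parents.get(current, [])
        if not upstream:
            roots.add(current)  # nothing upstream: this is an original source
        else:
            stack.extend(upstream)
    return roots


# Hypothetical lineage map: each key is built from the datasets in its list.
lineage = {
    "credit_model_features": ["cleaned_transactions", "bureau_scores"],
    "cleaned_transactions": ["raw_transactions"],
}
sources = trace_sources("credit_model_features", lineage)
```

If a source turns out to be flawed, the same map can be read in the other direction to find every model that depends on it.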

Read more on this metaphor in our blog The Skeleton of AI Governance – Operating Models and Technology, or read about the operating model from the AI Alliance on LinkedIn in The AI Operating Model: Blueprints for Scaling Without Chaos.

The Muscles and Nerves – Labour and Profession

Muscles provide strength, nerves provide sensitivity. In organizations, these are people, skills, and culture.

AI and the Legal Profession

In London’s legal market, AI now reviews contracts faster than teams of junior lawyers once could. Efficiency improved, but the career ladder weakened: without repetitive work, how do juniors learn judgment? Governance must ensure that AI augments rather than erases learning muscles.

Soft Controls and EQ

Muscles without nerves spasm. In governance, the nerves are culture and emotional intelligence. AI can provide data, but it cannot provide empathy. Boards must ensure that EQ remains central: it is the nervous system that prevents muscles from tearing.

Doctors Versus Black Boxes – NHS

When doctors resisted black-box diagnostic tools, they were not rejecting innovation. They were signaling that accountability requires understanding. A professional cannot ethically sign off on a diagnosis they cannot explain. Governance must listen to these nerve signals.

DPIA as Physiotherapy

A Data Protection Impact Assessment (DPIA), an instrument introduced by the European Union's GDPR, is like physiotherapy: a disciplined routine that keeps muscles and nerves agile. Running hypothetical DPIAs on AI systems reveals weaknesses before injury occurs. Governance is not just about building strength; it is about preventing paralysis.

Read more on this subject in our blog The Muscles and Nerves of AI Governance – Labour, Profession and Sensitivity, or read about AI from an employee’s perspective in this article by De Unie (a labor union): Impact of AI on the labor market: threat or opportunity?

The Armor – Regulation and Responsibility

Armor protects, regulates, and filters. In AI governance, this is the role of law, oversight, and accountability.

Cybersecurity as Board Duty

Cyber threats are no longer an IT problem but a board-level responsibility. Directors who neglect this are like soldiers leaving the shield at home. Armor must be carried by those in command.

Shield or Straitjacket

Regulation can be protective or suffocating. GDPR in Europe was seen as a bureaucratic burden, yet it saved many firms from reputational ruin. In AI, regulation must be adaptive: too heavy, and it paralyzes innovation; too light, and it fails to protect.

Audit Trail as Immune System

The body’s immune system remembers infections. Audit trails perform the same role: recording AI decisions so mistakes are not repeated. Organizations without audit trails are like bodies without immunity: one virus can cause collapse.
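An audit trail only works as memory if past entries cannot be changed unnoticed. A minimal sketch of that idea, assuming an in-memory list and hypothetical field names such as `model`, `output`, and `reviewer`, chains each record to the hash of the previous one so that tampering becomes detectable. A production system would also need durable storage and access controls, which are out of scope here.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(decision, prev_hash):
    """Hash a decision together with the previous entry's hash."""
    payload = json.dumps({"decision": decision, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(trail, decision):
    """Append a decision record chained to the previous entry."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS_HASH
    entry = {"decision": decision, "prev": prev_hash,
             "hash": _digest(decision, prev_hash)}
    trail.append(entry)
    return entry


def verify(trail):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in trail:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["decision"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical records: what the AI suggested, who checked it, and why.
trail = []
append_entry(trail, {"model": "triage-v1", "output": "refer",
                     "reviewer": "j.doe", "reason": "symptoms match guideline"})
append_entry(trail, {"model": "triage-v1", "output": "discharge",
                     "reviewer": "a.smith", "reason": "overridden after exam"})
```

Calling `verify(trail)` after every change to the log gives auditors a cheap check that what they are reading is what was originally recorded.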

Checks and Balances

Armor works when roles balance: boards set direction, auditors verify, regulators enforce, and stakeholders question. Together they create an immune system that adapts, remembers, and protects.

Read more on this subject in our blog The Armor of AI Governance – Regulation and Responsibility, or read about the legal context in Europe in EU AI Act: first regulation on artificial intelligence.

The Heart – Society and Stakeholders

The most powerful skeleton, brain, and armor cannot save a body without a beating heart. For AI governance, the heart is legitimacy.

Trust in Public Institutions – NHS

The NHS shows how fragile trust can be. AI errors in patient triage did not just harm individuals; they shook public confidence in an institution woven into the national identity. Governance must act as a cardiologist: constantly monitoring the heartbeat of trust.

Consumer Trust – Tesco

Tesco’s customer loyalty programs depend on data, but data without trust is toxic. Customers who feel exploited withdraw, and the heart skips a beat. AI must therefore be designed as a circulatory system: pumping value back to the customer, not just extracting it.

Finance and Fairness – Barclays and Nubank

Barclays in the UK and Nubank in Brazil both faced the same challenge: can AI in credit decisions be fair? If customers feel judged by invisible, biased systems, the bloodstream of legitimacy clots. Transparency and fairness are the oxygen that keeps financial systems alive.

Society as Oxygen

Stakeholders — employees, customers, regulators, investors — are the blood vessels. They carry oxygen throughout the system. If blocked, the body suffocates. Governance ensures that the heart keeps pumping legitimacy, so that every organ receives trust.

Read more on this subject in our blog The Heart of AI Governance – Society and Stakeholders, or read about concerns regarding the use of AI: a multistakeholder responsibility, from Erasmus University Rotterdam – Institute for Housing and Urban Development Studies.

Conclusion – A Living Organism, Not a Checklist

AI governance is not a new checklist to be filed in a compliance drawer. It is an organism.

- The brain sets vision and ethics.

- The skeleton provides structure and resilience.

- The muscles and nerves turn vision into action with sensitivity.

- The armor shields and filters threats.

- The heart pumps legitimacy through the system.

When all five work together, AI strengthens the corporate body. When one fails, the entire organism falters.

The lesson is simple and universal: AI will not govern itself. It will mirror the culture, systems, and responsibilities we build around it. The true measure of intelligence is not how fast an algorithm learns, but how wisely boards and stakeholders guide it.

FAQ on AI and Corporate Governance

Why compare AI governance to a living organism?

Because it shows that no single part can work in isolation. Ethics, systems, people, rules, and legitimacy only function together. A brain without a skeleton collapses, and a heart without circulation dies. Governance for AI works the same way: every part must balance, or the whole fails.

How can boards ensure AI ethics are more than slogans?

By insisting that AI produces advice that is transparent, testable, and documented. Ethics become real when directors can ask: Why did the system suggest this? Who checked it? Only when people validate the AI’s output and record the reasoning does governance move from rhetoric to practice.

What role do employees play in AI governance?

Employees are the true decision-makers. AI delivers analysis and suggestions, but people validate the output, apply judgment, and document why a decision was made. This ensures accountability: faster choices, but always with humans in control.

Is regulation a help or a hindrance for AI innovation?

Good regulation functions like an immune system: it protects the body while still allowing growth. Clear rules on accountability and documentation may slow things down slightly, but they also build trust. Without them, AI risks creating faster mistakes instead of better outcomes.

Why is stakeholder trust described as the heart of AI governance?

Because no system survives without legitimacy. Customers, regulators, investors, and employees need confidence that AI supports fair, responsible decisions. If they lose trust, the entire governance organism suffocates, no matter how advanced the technology.

What global examples show AI governance in practice?

Barclays had to address bias in lending models, Tesco manages privacy in customer analytics, and the NHS must prove AI can be safe in diagnostics. In each case, governance meant the same thing: AI provides the input, but people take responsibility for the decision and explain it afterwards.